Content under Creative Commons Attribution license CC-BY 4.0, code under BSD 3-Clause License © 2016 L.A. Barba, N.C. Clementi, G.F. Forsyth. Based on https://github.com/numerical-mooc/numerical-mooc/blob/master/lessons/05_relax/05_01_2D.Laplace.Equation.ipynb also under CC-BY and MIT licenses, 2015.

Relax and hold steady

Many problems in physics have no time dependence, yet are rich with physical meaning: the gravitational field produced by a massive object, the electrostatic potential of a charge distribution, the displacement of a stretched membrane and the steady flow of fluid through a porous medium ... all these can be modeled by Poisson's equation:

where the unknown and the known are functions of space, in a domain . To find the solution, we require boundary conditions. These could be Dirichlet boundary conditions, specifying the value of the solution on the boundary,

or Neumann boundary conditions, specifying the normal derivative of the solution on the boundary,

A boundary-value problem consists of finding , given the above information. Numerically, we can do this using relaxation methods, which start with an initial guess for and then iterate towards the solution. Let's find out how!

Laplace's equation

The particular case of (homogeneous case) results in Laplace's equation:

For example, the equation for steady, two-dimensional heat conduction is:

where is a temperature that has reached steady state. The Laplace equation models the equilibrium state of a system under the supplied boundary conditions.

The study of solutions to Laplace's equation is called potential theory, and the solutions themselves are often potential fields. Let's use from now on to represent our generic dependent variable, and write Laplace's equation again (in two dimensions):

Like in the diffusion equation, we discretize the second-order derivatives with central differences. If you need to refresh your mind, check out this lesson and try to discretize the equation by yourself. On a two-dimensional Cartesian grid, it gives:

When , we end up with the following equation:

This tells us that the Laplacian differential operator at grid point can be evaluated discretely using the value of at that point (with a factor ) and the four neighboring points to the left and right, above and below grid point .

The stencil of the discrete Laplacian operator is shown in Figure 1. It is typically called the five-point stencil, for obvious reasons.

Figure 1: Laplace five-point stencil.

The discrete equation above is valid for every interior point in the domain. If we write the equations for all interior points, we have a linear system of algebraic equations. We could solve the linear system directly (e.g., with Gaussian elimination), but we can be more clever than that!

Notice that the coefficient matrix of such a linear system has mostly zeroes. For a uniform spatial grid, the matrix is block diagonal: it has diagonal blocks that are tridiagonal with on the main diagonal and on two off-center diagonals, and two more diagonals with . All of the other elements are zero. Iterative methods are particularly suited for a system with this structure, and save us from storing all those zeroes.

We will start with an initial guess for the solution, , and use the discrete Laplacian to get an update, , then continue on computing until we're happy. Note that is not a time index here, but an index corresponding to the number of iterations we perform in the relaxation scheme.

At each iteration, we compute updated values in a (hopefully) clever way so that they converge to a set of values satisfying Laplace's equation. The system will reach equilibrium only as the number of iterations tends to , but we can approximate the equilibrium state by iterating until the change between one iteration and the next is very small.

The most intuitive method of iterative solution is known as the Jacobi method, in which the values at the grid points are replaced by the corresponding weighted averages:

This method does indeed converge to the solution of Laplace's equation. Thank you Professor Jacobi!

Challenge task

Grab a piece of paper and write out the coefficient matrix for a discretization with 7 grid points in the direction (5 interior points) and 5 points in the direction (3 interior). The system should have 15 unknowns, and the coefficient matrix three diagonal blocks. Assume prescribed Dirichlet boundary conditions on all sides (not necessarily zero).

Boundary conditions and relaxation

Suppose we want to model steady-state heat transfer on (say) a computer chip with one side insulated (zero Neumann BC), two sides held at a fixed temperature (Dirichlet condition) and one side touching a component that has a sinusoidal distribution of temperature. We would need to solve Laplace's equation with boundary conditions like

We'll take and for the sizes of the domain in the and directions.

One of the defining features of elliptic PDEs is that they are "driven" by the boundary conditions. In the iterative solution of Laplace's equation, boundary conditions are set and the solution relaxes from an initial guess to join the boundaries together smoothly, given those conditions. Our initial guess will be everywhere. Now, let's relax!

First, we import our usual smattering of libraries (plus a few new ones!)

from matplotlib import pyplot

import numpy

%matplotlib inline

from matplotlib import rcParams

rcParams['font.family'] = 'serif'

rcParams['font.size'] = 16

To visualize 2D data, we can use pyplot.imshow(), but a 3D plot can sometimes show a more intuitive view the solution. Or it's just prettier!

Be sure to enjoy the many examples of 3D plots in the mplot3d section of the Matplotlib Gallery.

We'll import the Axes3D library from Matplotlib and also grab the cm package, which provides different colormaps for visualizing plots.

from mpl_toolkits.mplot3d import Axes3D

from matplotlib import cm

Let's define a function for setting up our plotting environment, to avoid repeating this set-up over and over again. It will save us some typing.

def plot_3D(x, y, p):

'''Creates 3D plot with appropriate limits and viewing angle

Parameters:

----------

x: array of float

nodal coordinates in x

y: array of float

nodal coordinates in y

p: 2D array of float

calculated potential field

'''

fig = pyplot.figure(figsize=(11,7), dpi=100)

ax = fig.gca(projection='3d')

X,Y = numpy.meshgrid(x,y)

surf = ax.plot_surface(X,Y,p[:], rstride=1, cstride=1, cmap=cm.viridis,

linewidth=0, antialiased=False)

ax.set_xlim(0,1)

ax.set_ylim(0,1)

ax.set_xlabel('$x$')

ax.set_ylabel('$y$')

ax.set_zlabel('$z$')

ax.view_init(30,45)

Note

This plotting function uses Viridis, a new (and awesome) colormap available in Matplotlib versions 1.5 and greater. If you see an error when you try to plot using cm.viridis, just update Matplotlib using conda or pip.

Analytical solution

The Laplace equation with the boundary conditions listed above has an analytical solution, given by

where and are the length of the domain in the and directions, respectively.

We will use numpy.meshgrid to plot our 2D solutions. This is a function that takes two vectors (x and y, say) and returns two 2D arrays of and coordinates that we then use to create the contour plot. Always useful, linspace creates 1-row arrays of equally spaced numbers: it helps for defining and axes in line plots, but now we want the analytical solution plotted for every point in our domain. To do this, we'll use in the analytical solution the 2D arrays generated by numpy.meshgrid.

def p_analytical(x, y):

X, Y = numpy.meshgrid(x,y)

p_an = numpy.sinh(1.5*numpy.pi*Y / x[-1]) /\

(numpy.sinh(1.5*numpy.pi*y[-1]/x[-1]))*numpy.sin(1.5*numpy.pi*X/x[-1])

return p_an

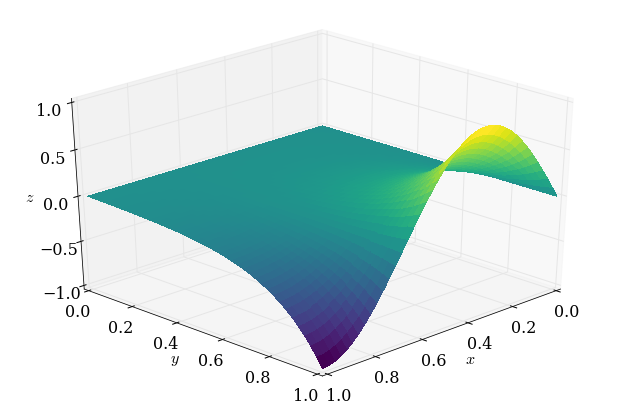

Ok, let's try out the analytical solution and use it to test the plot_3D function we wrote above.

nx = 41

ny = 41

x = numpy.linspace(0,1,nx)

y = numpy.linspace(0,1,ny)

p_an = p_analytical(x,y)

plot_3D(x,y,p_an)

It worked! This is what the solution should look like when we're 'done' relaxing. (And isn't viridis a cool colormap?)

How long do we iterate?

We noted above that there is no time dependence in the Laplace equation. So it doesn't make a lot of sense to use a for loop with nt iterations.

Instead, we can use a while loop that continues to iteratively apply the relaxation scheme until the difference between two successive iterations is small enough.

But how small is small enough? That's a good question. We'll try to work that out as we go along.

To compare two successive potential fields, a good option is to use the L2 norm of the difference. It's defined as

But there's one flaw with this formula. We are summing the difference between successive iterations at each point on the grid. So what happens when the grid grows? (For example, if we're refining the grid, for whatever reason.) There will be more grid points to compare and so more contributions to the sum. The norm will be a larger number just because of the grid size!

That doesn't seem right. We'll fix it by normalizing the norm, dividing the above formula by the norm of the potential field at iteration .

For two successive iterations, the relative L2 norm is then calculated as

Our Python code for this calculation is a one-line function:

def L2_error(p, pn):

return numpy.sqrt(numpy.sum((p - pn)**2)/numpy.sum(pn**2))

Now, let's define a function that will apply Jacobi's method for Laplace's equation. Three of the boundaries are Dirichlet boundaries and so we can simply leave them alone. Only the Neumann boundary needs to be explicitly calculated at each iteration, and we'll do it by discretizing the derivative in its first order approximation:

def laplace2d(p, l2_target):

'''Iteratively solves the Laplace equation using the Jacobi method

Parameters:

----------

p: 2D array of float

Initial potential distribution

l2_target: float

target for the difference between consecutive solutions

Returns:

-------

p: 2D array of float

Potential distribution after relaxation

'''

l2norm = 1

pn = numpy.empty_like(p)

while l2norm > l2_target:

pn = p.copy()

p[1:-1,1:-1] = .25 * (pn[1:-1,2:] + pn[1:-1, :-2] \

+ pn[2:, 1:-1] + pn[:-2, 1:-1])

##Neumann B.C. along x = L

p[1:-1, -1] = p[1:-1, -2] # 1st order approx of a derivative

l2norm = L2_error(p, pn)

return p

Rows and columns, and index order

The physical problem has two dimensions, so we also store the temperatures in two dimensions: in a 2D array.

We chose to store it with the coordinates corresponding to the rows of the array and coordinates varying with the columns (this is just a code design decision!). If we are consistent with the stencil formula (with corresponding to index and to index ), then will be stored in array format as p[j,i].

This might be a little confusing as most of us are used to writing coordinates in the format , but our preference is to have the data stored so that it matches the physical orientation of the problem. Then, when we make a plot of the solution, the visualization will make sense to us, with respect to the geometry of our set-up. That's just nicer than to have the plot rotated!

Figure 2: Row-column data storage

As you can see on Figure 2 above, if we want to access the value we would write those coordinates as . You can also see that its location is the 3rd row, 2nd column, so its array address would be p[3,2].

Again, this is a design decision. However you can choose to manipulate and store your data however you like; just remember to be consistent!

Let's relax!

The initial values of the potential field are zero everywhere (initial guess), except at the boundary:

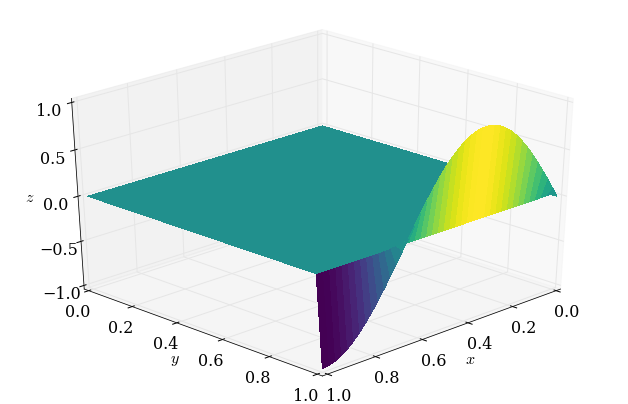

To initialize the domain, numpy.zeros will handle everything except that one Dirichlet condition. Let's do it!

##variable declarations

nx = 41

ny = 41

##initial conditions

p = numpy.zeros((ny,nx)) ##create a XxY vector of 0's

##plotting aids

x = numpy.linspace(0,1,nx)

y = numpy.linspace(0,1,ny)

##Dirichlet boundary conditions

p[-1,:] = numpy.sin(1.5*numpy.pi*x/x[-1])

Now let's visualize the initial conditions using the plot_3D function, just to check we've got it right.

plot_3D(x, y, p)

The p array is equal to zero everywhere, except along the boundary . Hopefully you can see how the relaxed solution and this initial condition are related.

Now, run the iterative solver with a target L2-norm difference between successive iterations of .

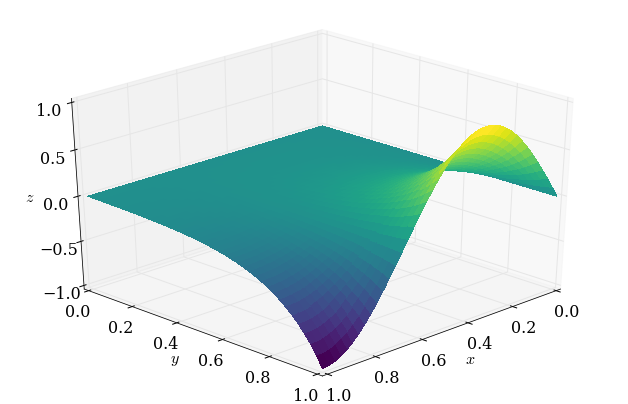

p = laplace2d(p.copy(), 1e-8)

Let's make a gorgeous plot of the final field using the newly minted plot_3D function.

plot_3D(x,y,p)

Awesome! That looks pretty good. But we'll need more than a simple visual check, though. The "eyeball metric" is very forgiving!

Convergence analysis

Convergence, Take 1

We want to make sure that our Jacobi function is working properly. Since we have an analytical solution, what better way than to do a grid-convergence analysis? We will run our solver for several grid sizes and look at how fast the L2 norm of the difference between consecutive iterations decreases.

Let's make our lives easier by writing a function to "reset" the initial guess for each grid so we don't have to keep copying and pasting them.

def laplace_IG(nx):

'''Generates initial guess for Laplace 2D problem for a

given number of grid points (nx) within the domain [0,1]x[0,1]

Parameters:

----------

nx: int

number of grid points in x (and implicitly y) direction

Returns:

-------

p: 2D array of float

Pressure distribution after relaxation

x: array of float

linspace coordinates in x

y: array of float

linspace coordinates in y

'''

##initial conditions

p = numpy.zeros((nx,nx)) ##create a XxY vector of 0's

##plotting aids

x = numpy.linspace(0,1,nx)

y = x

##Dirichlet boundary conditions

p[:,0] = 0

p[0,:] = 0

p[-1,:] = numpy.sin(1.5*numpy.pi*x/x[-1])

return p, x, y

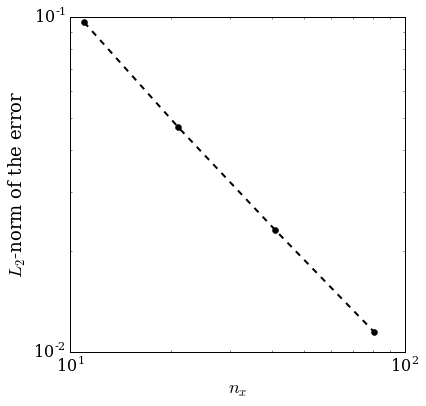

Now run Jacobi's method on the Laplace equation using four different grids, with the same exit criterion of each time. Then, we look at the error versus the grid size in a log-log plot. What do we get?

nx_values = [11, 21, 41, 81]

l2_target = 1e-8

error = numpy.empty_like(nx_values, dtype=numpy.float)

for i, nx in enumerate(nx_values):

p, x, y = laplace_IG(nx)

p = laplace2d(p.copy(), l2_target)

p_an = p_analytical(x, y)

error[i] = L2_error(p, p_an)

pyplot.figure(figsize=(6,6))

pyplot.grid(True)

pyplot.xlabel(r'$n_x$', fontsize=18)

pyplot.ylabel(r'$L_2$-norm of the error', fontsize=18)

pyplot.loglog(nx_values, error, color='k', ls='--', lw=2, marker='o')

pyplot.axis('equal');

Hmm. That doesn't look like 2nd-order convergence, but we're using second-order finite differences. What's going on? The culprit is the boundary conditions. Dirichlet conditions are order-agnostic (a set value is a set value), but the scheme we used for the Neumann boundary condition is 1st-order.

Remember when we said that the boundaries drive the problem? One boundary that's 1st-order completely tanked our spatial convergence. Let's fix it!

2nd-order Neumann BCs

Up to this point, we have used the first-order approximation of a derivative to satisfy Neumann B.C.'s. For a boundary located at this reads,

which, solving for gives us

Using that Neumann condition will limit us to 1st-order convergence. Instead, we can start with a 2nd-order approximation (the central-difference approximation):

That seems problematic, since there is no grid point . But no matter … let's carry on. According to the 2nd-order approximation,

Recall the finite-difference Jacobi equation with :

Notice that the equation relies on the troublesome (nonexistent) point , but according to the equality just above, we have a value we can substitute, namely . Ah! We've completed the 2nd-order Neumann condition:

That's a bit more complicated than the first-order version, but it's relatively straightforward to code.

Note

Do not confuse with p[-1]: p[-1] is a piece of Python code used to refer to the last element of a list or array named p. is a 'ghost' point that describes a position that lies outside the actual domain.

Convergence, Take 2

We can copy the previous Jacobi function and replace only the line implementing the Neumann boundary condition.

Careful!

Remember that our problem has the Neumann boundary located at and not as we assumed in the derivation above.

def laplace2d_neumann(p, l2_target):

'''Iteratively solves the Laplace equation using the Jacobi method

with second-order Neumann boundary conditions

Parameters:

----------

p: 2D array of float

Initial potential distribution

l2_target: float

target for the difference between consecutive solutions

Returns:

-------

p: 2D array of float

Potential distribution after relaxation

'''

l2norm = 1

pn = numpy.empty_like(p)

while l2norm > l2_target:

pn = p.copy()

p[1:-1,1:-1] = .25 * (pn[1:-1,2:] + pn[1:-1, :-2] \

+ pn[2:, 1:-1] + pn[:-2, 1:-1])

##2nd-order Neumann B.C. along x = L

p[1:-1,-1] = .25 * (2*pn[1:-1,-2] + pn[2:, -1] + pn[:-2, -1])

l2norm = L2_error(p, pn)

return p

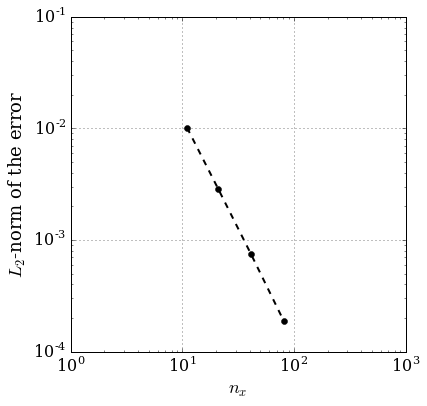

Again, this is the exact same code as before, but now we're running the Jacobi solver with a 2nd-order Neumann boundary condition. Let's do a grid-refinement analysis, and plot the error versus the grid spacing.

nx_values = [11, 21, 41, 81]

l2_target = 1e-8

error = numpy.empty_like(nx_values, dtype=numpy.float)

for i, nx in enumerate(nx_values):

p, x, y = laplace_IG(nx)

p = laplace2d_neumann(p.copy(), l2_target)

p_an = p_analytical(x, y)

error[i] = L2_error(p, p_an)

pyplot.figure(figsize=(6,6))

pyplot.grid(True)

pyplot.xlabel(r'$n_x$', fontsize=18)

pyplot.ylabel(r'$L_2$-norm of the error', fontsize=18)

pyplot.loglog(nx_values, error, color='k', ls='--', lw=2, marker='o')

pyplot.axis('equal');

Nice! That's much better. It might not be exactly 2nd-order, but it's awfully close. (What is "close enough" in regards to observed convergence rates is a thorny question.)

Now, notice from this plot that the error on the finest grid is around . Given this, perhaps we didn't need to continue iterating until a target difference between two solutions of . The spatial accuracy of the finite difference approximation is much worse than that! But we didn't know it ahead of time, did we? That's the "catch 22" of iterative solution of systems arising from discretization of PDEs.

Final word

The Jacobi method is the simplest relaxation scheme to explain and to apply. It is also the worst iterative solver! In practice, it is seldom used on its own as a solver, although it is useful as a smoother with multi-grid methods. There are much better iterative methods! If you are curious you can find them in this lesson.

#Ignore this cell, It simply loads a style for the notebook.

from IPython.core.display import HTML

def css_styling():

try:

styles = open("../styles/custom.css", "r").read()

return HTML(styles)

except:

pass

css_styling()

'], ["\(","\)"] ], displayMath: [ [''], ["\[","\]"] ] }, displayAlign: 'center', // Change this to 'center' to center equations. "HTML-CSS": { styles: {'.MathJax_Display': {"margin": 4}} } });